- Homepage

- About the Joint Centre

- Research Areas & Projects

- Researchers

- News & Events

- Open Positions

Automatic understanding and interpretation of video content 1 is a key enabling factor for a range of practical applications such as organizing and searching home videos or content-aware video advertising. For example, interpreting videos of “making a birthday cake” or “planting a tree” could provide effective means for advertising products in local grocery stores or garden centers.

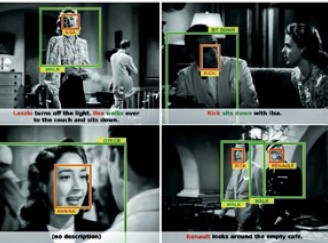

The goal of this work is to automatically generate annotations of complex dynamic events in video. We wish to deal with events involving multiple people interacting with each other, objects and the scene, for example people at a party in a house. The goal is to generate structured annotations going beyond simple tags. Examples include entire text sentences describing the video content as well as bounding boxes or segmentations spatially and temporally localizing the described objects and people in video. Such annotations will in turn open-up the possibility to organize and search video content using well-developed technology from e.g text search or natural language processing.

We build on the considerable progress in visual object, scene and human action recognition achieved in the last ten years, as well as the recent advances in large-scale machine learning that enable optimizing complex structured models using massive sets of data. In particular, we develop structured video representations of people, objects and scenes, able to describe their complex interactions as well as their long-term evolution in the dynamic scene.

To this end, we investigate different models of the video stream including: (i) designing explicit representations of scene geometry together with scene entities and their interactions as well as (ii) directly learning mid-level representations of spatio-temporal video content using dictionary learning or convolutional neural networks.

To train the developed models we design weakly-supervised learning methods making use of videos and the associated readily-available metadata such as text, speech or associated depth (in the case of 3D videos).

To enable accessing the massive amounts of available video data we also develop representations that allow for efficient extraction and indexing. We believe such models and methods are required to make a breakthrough in automatic understanding of dynamic video scènes.

2016

Communication dans un congrès

2015

Communication dans un congrès

2014

Article dans une revue

Communication dans un congrès

2013

Article dans une revue

2012

Communication dans un congrès

Rapport

2011

Communication dans un congrès

2009

Communication dans un congrès